Cloud API

Real-time Speech Recognition Cloud API

1 Feature Introduction

For long-duration speech data streams (up to 37 hours), suitable for scenarios requiring continuous recognition over extended periods, such as conference speeches and live video streaming.

Real-time speech recognition only provides a WebSocket (streaming) interface.

2 Request URL

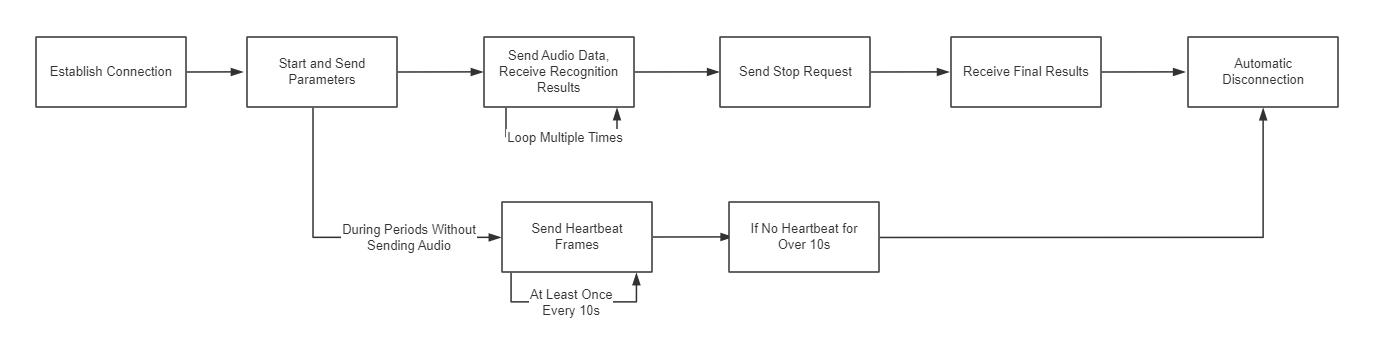

wss://api.voice.dolphin-ai.jp/v1/asr/ws3 Interaction Process

1. Authorization

When the client establishes a WebSocket connection with the server, the following request header need to be set:

| Name | Type | Required | Description |

|---|---|---|---|

| Authorization | String | Yes | Standard HTTP header for setting authorization information. The format must be the standard Bearer ${Token} form (note the space after "Bearer"). |

For authorization-related operations, please refer to Authorization and Access Permissions.

2. Start and Send Parameters

A request is sent from the client, and the server confirms the validity of the request. Parameters must be set within the request message.

Request parameters (header object):

| Parameter | Type | Required | Description |

|---|---|---|---|

| namespace | String | Yes | The namespace to which the message belongs: SpeechTranscriber indicates real-time speech recognition |

| name | String | Yes | Event name: StartTranscription indicates the start phase |

Request parameters (payload object):

| Parameter | Type | Required | Description | Default Value |

|---|---|---|---|---|

| lang_type | String | Yes | Language option, refer to Developer Guides - Language Support | Required |

| format | String | No | Audio encoding format, refer to Developer Guides - Audio Encoding | pcm |

| sample_rate | Integer | No | Audio sampling rate, refer to Developer Guides - Basic Terms When sample_rate=‘8000’ field parameter field is required, and field=‘call-center’ | 16000 |

| enable_intermediate_result | Boolean | No | Whether to return intermediate recognition results | true |

| enable_punctuation_prediction | Boolean | No | Whether to add punctuation in post-processing | true |

| enable_inverse_text_normalization | Boolean | No | Whether to perform ITN in post-processing, refer to Developer Guides - Basic Terms | true |

| max_sentence_silence | Integer | No | Speech sentence breaking detection threshold. Silence longer than this threshold is considered as a sentence break. The valid parameter range is 200~1200. Unit: Milliseconds | sample_rate=16000:800 sample_rate=8000:250 |

| enable_words | Boolean | No | Whether to return word information, refer to Developer Guides - Basic Terms | false |

| enable_intermediate_words | Boolean | No | Whether to return intermediate result word information, refer to Developer Guides - Basic Terms | false |

| enable_modal_particle_filter | Boolean | No | Whether to enable modal particle filtering, refer to Developer Guides - Basic Terms | true |

| hotwords_list | List<String> | No | One-time hotwords list, effective only for the current connection. If both hotwords_list and hotwords_id parameters exist, hotwords_list will be used. Up to 100 entries can be provided at a time. Refer to Developer Guides - Practical Features. | None |

| hotwords_id | String | No | Hotwords ID, refer to Developer Guides - Practical Features | None |

| hotwords_weight | Float | No | Hotwords weight, the range of values [0.1, 1.0] | 0.4 |

| correction_words_id | String | No | Forced correction vocabulary ID, refer to Developer Guides - Practical Features Supports multiple IDs, separated by a vertical bar |; all indicates using all IDs. | None |

| forbidden_words_id | String | No | Forbidden words ID, refer to Developer Guides - Practical Features Supports multiple IDs, separated by a vertical bar |; all indicates using all IDs. | None |

| field | String | No | Field general: supports the sample_rate of 16000Hz call-center: supports the sample_rate of 8000Hz | None |

| audio_url | String | No | Returned audio format (stored on the platform for only 30 days) mp3: Returns a url for the audio in mp3 format pcm: Returns a url for the audio in pcm format wav: Returns a url for the audio in wav format | None |

| connect_timeout | Integer | No | Connection timeout (seconds), range: 5-60 | 10 |

| gain | Integer | No | Amplitude gain factor, range [1, 20], refer to Developer Guides - Practical Features 1 indicates no amplification, 2 indicates the original amplitude doubled (amplified by 1 times), and so on | sample_rate=16000:1 sample_rate=8000:2 |

| user_id | String | No | Custom user information, which will be returned unchanged in the response message, with a maximum length of 36 characters | None |

| enable_lang_label | Boolean | No | Return language code in recognition results when switching languages, only effective for Japanese-English and Chinese-English mixed languages. Note: Enabling this feature may cause a response delay when switching languages | false |

| paragraph_condition | Integer | No | Return a new paragraph number in the next sentence within the same speaker_id when the set character count is reached, range [100, 2000], values outside the range indicate that this feature is not enabled | 0 |

| enable_save_log | Boolean | No | Provide log of audio data and recognition results to help us improve the quality of our products and services | true |

| enable_spoken | Boolean | No | When enabled, the sentence information will increase the return of pronunciation. Note: This feature currently only supports Japanese and is not available for other languages. | false |

| enable_dynamic_break | Boolean | No | When enabled, it will adaptively adjust the sentence-breaking effect based on the speaking rate and the maximum silence duration parameter (max_sentence_silence). | false |

| enable_speaker_label | Boolean | No | Whether to activate real-time role distinction. When activated, the label tag will be returned in the word information to display role information. Note: This takes effect only when the word information return parameter is enabled. Intermediate result word information parameter: enable_intermediate_words; Final result word information parameter: enable_words. | false |

Example of a request:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "StartTranscription"

},

"payload": {

"lang_type": "ja-JP",

"format": "pcm",

"sample_rate": 16000,

"enable_words":true

}

}Response parameters (header object):

| Parameter | Type | Description |

|---|---|---|

| namespace | String | The namespace to which the message belongs: SpeechTranscriber indicates real-time speech recognition |

| name | String | Event name: TranscriptionStarted indicates the start phase |

| status | String | Status code |

| status_text | String | Status code description |

| task_id | String | The globally unique ID for the task; please record this value for troubleshooting |

Example of a response:

{

"header":{

"namespace": "SpeechTranscriber",

"name": "TranscriptionStarted",

"status": "000000",

"status_text": "success",

"app_id": "2362af9b-7302-4e07-89c5-oc5ac0358b8a",

"task_id": "ffae0288-eb2c-4u58-9482-a04c96d291a9",

"message_id": "b8ba637e-289c-4947-a303-88a283e7b4aa"

},

"payload":{

"index":0,

"time":0,

"begin_time":0,

"speaker_id":"",

"result":"",

"words":null

}

}3. Send Audio Data and Receive Recognition Results

Send audio data in a loop and continuously receive recognition results. It is recommended to send data packets of 7680 Bytes each time.

The real-time speech recognition service has an automatic sentence-breaking feature that determines the beginning and end of a sentence according to the length of silence between the utterances, which is represented by events in the returned results. The SentenceBegin and SentenceEnd events respectively indicate the start and end of a sentence, while the TranscriptionResultChanged event indicates the intermediate recognition results of a sentence.

SentenceBegin Event

The SentenceBegin event indicates that the server has detected the beginning of a sentence.

Response parameters (header object):

| Parameter | Type | Description |

|---|---|---|

| namespace | String | The namespace to which the message belongs: SpeechTranscriber indicates real-time speech recognition |

| name | String | Message name: SentenceBegin indicates the beginning of a sentence |

| status | Integer | Status code, indicating whether the request was successful, refer to service status codes |

| status_text | String | Status message |

| app_id | String | Application ID |

| task_id | String | The globally unique ID for the task; please record this value for troubleshooting |

| message_id | String | The ID for this message |

Response parameters (payload object):

| Parameter | Type | Description |

|---|---|---|

| index | Integer | Sentence number, starting from 1 and incrementing |

| time | Integer | The duration of the currently processed audio, in milliseconds |

| begin_time | Integer | The time corresponding to the SentenceBegin event for the current sentence, in milliseconds |

| speaker_id | String | Speaker number, refer to the section 'Additional Features - Speaker ID' below |

| result | String | Recognition result, which may be empty |

| confidence | Float | The confidence level of the current result, in the range [0, 1] |

| words | List<Word> | Always null |

Example of a response:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "SentenceBegin",

"status": "000000",

"status_text": "success",

"app_id": "2362af9b-7302-4e07-89c5-oc5ac0358b8a",

"task_id": "ffae0288-eb2c-4u58-9482-a04c96d291a9",

"message_id": "82e8b452-47a8-44ab-aa68-dd51ef803d89"

},

"payload": {

"index": 1,

"begin_time": 2640,

"time": 3280,

"speaker_id": "",

"result": "The",

"words": []

}

}TranscriptionResultChanged Event

Recognition results are divided into "intermediate results" and "final results". For details, refer to the Developer Guides - Basic Terms.

The TranscriptionResultChanged event indicates the change in recognition results, i.e., the intermediate results of a sentence.

-

If

enable_intermediate_resultis set totrue, the server will return multipleTranscriptionResultChangedmessages, i.e., intermediate recognition results. -

If

enable_intermediate_resultis set tofalse, the server will not return any messages for this step.

SentenceEnd event as the final recognition result.Response parameters (header object):

The header object parameters are the same as in the previous table (see the SentenceBegin event header object return parameters), with name being TranscriptionResultChanged indicating the intermediate recognition result of a sentence.

Response parameters (payload object):

| Parameter | Type | Description |

|---|---|---|

| index | Integer | Sentence number, starting from 1 and incrementing. |

| time | Integer | The duration of the currently processed audio, in milliseconds. |

| begin_time | Integer | The time corresponding to the SentenceBegin event for the current sentence, in milliseconds. |

| result | String | The recognition result of this sentence. |

| confidence | Float | The confidence level of the current result, in the range [0, 1]. |

| words | Dict[] | The intermediate result word information of this sentence It will only be returned when enable_intermediate_words is set to true. |

Within it, the intermediate result word information words object:

| Parameter | Type | Description |

|---|---|---|

| word | String | Text |

| start_time | Integer | The start time of the word, in milliseconds |

| end_time | Integer | The end time of the word, in milliseconds |

| confidence | Float | The confidence level of the current result, in the range [0, 1]. |

| label | String | Speaker numbers increase sequentially starting from speaker_1. If the label is the same, it indicates the same speaker.Results are returned only when enable_speaker_label is set to true. |

Example of a response:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "TranscriptionResultChanged",

"status": "000000",

"status_text": "success",

"app_id": "2362af9b-7302-4e07-89c5-oc5ac0358b8a",

"task_id": "ffae0288-eb2c-4u58-9482-a04c96d291a9",

"message_id": "1814d9f6-12da-44c1-8db5-ddc775773b85"

},

"payload": {

"index": 1,

"begin_time": 2640,

"time": 3920,

"result": "The weather",

"words": [

{

"word": "The",

"start_time": 2700,

"end_time": 3320,

"confidence": 0.96875696,

"label": "speaker_1"

},

{

"word": "weather",

"start_time": 3450,

"end_time": 4090,

"confidence": 0.87596966,

"label": "speaker_1"

}

]

}

}SentenceEnd Event

The SentenceEnd event indicates that the server has detected the end of a sentence and returns the final recognition result of that sentence.

Response parameters (header object):

The header object parameters are the same as in the previous table (see the SentenceBegin event header object return parameters). name is SentenceEnd, indicating that the end of a sentence has been recognized.

Response parameters (payload object):

| Parameter | Type | Description |

|---|---|---|

| index | Integer | Sentence number, starting from 1 and incrementing |

| time | Integer | The duration of the currently processed audio, in milliseconds |

| begin_time | Integer | The time corresponding to the SentenceBegin event for the current sentence, in milliseconds |

| speaker_id | String | Speaker number, refer to the section 'Additional Features - Speaker ID' below |

| result | String | The recognition result of this sentence |

| confidence | Float | The confidence level of the current result, in the range [0, 1]. |

| words | Dict[] | The final result word information of this sentence. It will be only returned when enable_words is set to true. |

Within it, the word information words object:

| Parameter | Type | Description |

|---|---|---|

| word | String | Text |

| start_time | Integer | The start time of the word, in milliseconds |

| end_time | Integer | The end time of the word, in milliseconds |

| type | String | Typenormal indicates regular text, forbidden indicates forbidden words, modal indicates modal particles (not returned if enable_modal_particle_filter is set to true), punc indicates punctuation marks |

| confidence | Float | The confidence level of the current result, in the range [0, 1]. |

| label | String | Speaker numbers increase sequentially starting from speaker_1. If the label is the same, it indicates the same speaker.Results are returned only when enable_speaker_label is set to true. |

Example of a response:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "SentenceEnd",

"status": "000000",

"status_text": "success",

"app_id": "2362af9b-7302-4e07-89c5-oc5ac0358b8a",

"task_id": "ef81e8a0-953b-4793-bf5b-f98323360936",

"message_id": "e7c4b784-897a-4374-ba7b-696f62fd5d29"

},

"payload": {

"index": 1,

"begin_time": 2640,

"time": 6965,

"speaker_id": "",

"result": "The weather is nice, so let's go for a walk.",

"words": [

{

"word": "The",

"type": "normal",

"start_time": 2700,

"end_time": 3320,

"confidence": 0.96875696,

"label": "speaker_1"

},

{

"word": "weather",

"type": "normal",

"start_time": 3450,

"end_time": 4090,

"confidence": 0.87596966,

"label": "speaker_1"

},

{

"word": "is",

"type": "normal",

"start_time": 4090,

"end_time": 4480,

"confidence": 0.97566869,

"label": "speaker_1"

},

{

"word": "nice",

"type": "normal",

"start_time": 4480,

"end_time": 4630,

"confidence": 0.98766965,

"label": "speaker_1"

},

{

"word": ",",

"type": "punc",

"start_time": 4680,

"end_time": 4680,

"confidence": 1,

"label": "speaker_1"

},

……more……

]

}

}Additional Feature

The following additional feature is only available in real-time speech recognition.

Forced Sentence Breaking

During the transmission of audio data, sending a SentenceEnd event will force the server to break the sentence at the current position. After the server processes the sentence breaking, the client will receive a SentenceEnd message with the final recognition result for that sentence.

Example of a request:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "SentenceEnd"

}

}4. Maintain Connection (Heartbeat Mechanism)

When not sending audio, it is necessary to send at least one heartbeat packet every 10 seconds, or the connection will be automatically disconnected. Clients are recommended to send a heartbeat every 8 seconds.

Example of a request:

{

"header":{

"namespace":"SpeechTranscriber",

"name":"Ping"

}

}Example of a response:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "Pong",

"status": "000000",

"status_text": "success",

"app_id": "f600aa9a-b117-4c62-ab7c-c7d9e6713a6f",

"task_id": "c7dd0acd-b47c-4ea7-a772-3d2cae15dbf2",

"message_id": "f013e785-238e-402d-ad60-81cd59566391"

},

"payload": {

"index": 0,

"begin_time": 0,

"time": 0,

"speaker_id": "",

"result": "",

"words": null

}

}If no data is transmitted to the server within 10 seconds, the server will return an error message and then automatically disconnect the connection.

5. Stop and Retrieve Final Results

The client sends a request to stop real-time speech recognition, notifying the server that the transmission of audio data has ended and to terminate speech recognition. The server returns the final recognition result and then automatically disconnects the connection.

Request parameters (header object):

| Parameter | Type | Description |

|---|---|---|

| namespace | String | The namespace to which the message belongs. SpeechTranscriber indicates real-time speech recognition |

| name | String | Message name. StopTranscription indicates terminating real-time speech recognition |

Example of a request:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "StopTranscription"

}

}Response parameters (header object):

| Parameter | Type | Description |

|---|---|---|

| namespace | String | The namespace to which the message belongs. SpeechTranscriber indicates real-time speech recognition |

| name | String | Message name. TranscriptionCompleted indicates that recognition is completed |

| status | Integer | Status code, indicating whether the request was successful, see service status codes |

| status_text | String | Status message |

| app_id | String | Application ID |

| task_id | String | The globally unique ID for the task; please record this value for troubleshooting |

| message_id | String | The ID for this message |

Return parameters (payload object): The format is the same as the SentenceEnd event, but the result and words fields may be empty.

Example of a response:

{

"header": {

"namespace": "SpeechTranscriber",

"name": "TranscriptionCompleted",

"status": "000000",

"status_text": "success",

"app_id": "2362af1b-7362-4e07-89c5-cc5ac0358b8a",

"task_id": "ef81e8a0-953b-4793-bf5b-f98323360936",

"message_id": "b272fe57-ec84-4b8d-b567-fdbffc0ca86a"

},

"payload": {

"index": 0,

"begin_time": 0,

"time": 0,

"speaker_id": "",

"result": "",

"words": []

}

}